Asked By : lululu

Answered By : Martin Berger

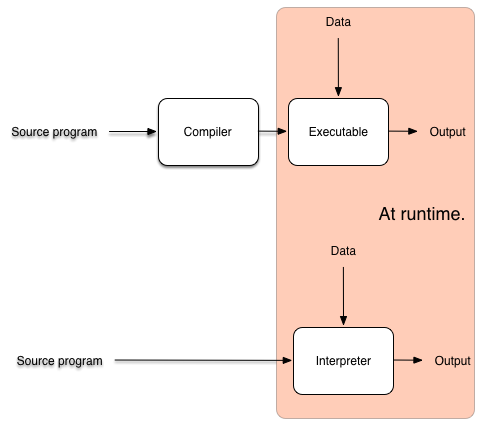

- Interpreter: takes a source program $P$, and runs $P$, that means affecting the world through running $P$.

- Compiler: takes a source program $P$, produces a new program $P_{asm}$. When we run $P_{asm}$, it has the same (hopefully) effect on the world as $P$.

In a picture:  The advantage of compilers is that generated code is faster, because a lot of work has to be done only once (e.g. lexing, parsing, type-checking, optimisation), at compile-time, so is out of the way at runtime. And the results of this work are shared in every execution. The interpreter has to redo this work every time. A problem of AOT compilers is that they often don’t have enough information to perform aggressive optimisation. This information is only available at run-time. A JIT compiler seeks to do better, more aggressive optimisation by executing only at run-time. However, a full compilation cycle is expensive (time-consuming), so shouldn’t be carried out at run-time. How can we deal with this conundrum? The key insight is that program execution time tends to follow the Pareto Principle: the great majority of a program’s execution time is (usually) spent running in a tiny fragment of the code. Such code is referred to as hot. Pareto’s principle tells us that (typically) a program contains some hot code. Think: innermost loops. With the information available at run-time, we can aggressively optimise such hot code, and get a massive speed-up. The rest is interpreted. Sluggishness of interpretation doesn’t matter, because it’s only a fraction of program execution time. There is just one problem … how do we find hot code? Remember, at compile time, the optimiser couldn’t work it out (reliably). How can we do better at run-time? Answer: Let’s use counters! We instrument the interpreter with counters, that increment every time a method is called, or every time we go round a loop. Whenever these counters reach a threshold, we assume that the associated code is hot. We compile that hot code, optimise it aggressively using information available at runtime. The jump to the compiled code, and execute it to completion. When the compiled code terminates, we go back to interpretation. In a picture:

The advantage of compilers is that generated code is faster, because a lot of work has to be done only once (e.g. lexing, parsing, type-checking, optimisation), at compile-time, so is out of the way at runtime. And the results of this work are shared in every execution. The interpreter has to redo this work every time. A problem of AOT compilers is that they often don’t have enough information to perform aggressive optimisation. This information is only available at run-time. A JIT compiler seeks to do better, more aggressive optimisation by executing only at run-time. However, a full compilation cycle is expensive (time-consuming), so shouldn’t be carried out at run-time. How can we deal with this conundrum? The key insight is that program execution time tends to follow the Pareto Principle: the great majority of a program’s execution time is (usually) spent running in a tiny fragment of the code. Such code is referred to as hot. Pareto’s principle tells us that (typically) a program contains some hot code. Think: innermost loops. With the information available at run-time, we can aggressively optimise such hot code, and get a massive speed-up. The rest is interpreted. Sluggishness of interpretation doesn’t matter, because it’s only a fraction of program execution time. There is just one problem … how do we find hot code? Remember, at compile time, the optimiser couldn’t work it out (reliably). How can we do better at run-time? Answer: Let’s use counters! We instrument the interpreter with counters, that increment every time a method is called, or every time we go round a loop. Whenever these counters reach a threshold, we assume that the associated code is hot. We compile that hot code, optimise it aggressively using information available at runtime. The jump to the compiled code, and execute it to completion. When the compiled code terminates, we go back to interpretation. In a picture:  Note that the description above is a simplification, the details are more complicated. Note also that there is no need for the first part of a JIT compiler to be interpreted. In principle, and AOT can emit code that is effectively a JIT-compiler, and the job of the interpreter (evaluation and profiling) is carried out by ahead-of-time compiled machine code.

Note that the description above is a simplification, the details are more complicated. Note also that there is no need for the first part of a JIT compiler to be interpreted. In principle, and AOT can emit code that is effectively a JIT-compiler, and the job of the interpreter (evaluation and profiling) is carried out by ahead-of-time compiled machine code.

Best Answer from StackOverflow

Question Source : http://cs.stackexchange.com/questions/56478 Ask a Question Download Related Notes/Documents